Reminder on how to run ollama locally and with RAG

Small reminder to myself on how to run my local and cloud ollama LLMs with a custom document database for RAG.

When possible, and for a number of reasons, I prefer to run LLMs locally. This very short tutorial on how to do it is mainly for my own reference, because I constantly forget, though it is likely to be useful to others.

Install ollama

Very simple:

curl -fsSL https://ollama.com/install.sh | sh

Or use whatever method the ollama installation instructions describe for your system. I use Linux (Ubuntu) because I am a bit of a nerd.
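Once the installer finishes, a quick way to confirm that the ollama server is actually running is to ask its HTTP API for the version. This is a minimal Python sketch, assuming the default address http://localhost:11434 and the /api/version route; `ollama_version` is a hypothetical helper name, not part of ollama.

```python
import json
import urllib.error
import urllib.request

# Assumption: ollama listens on its default local address.
OLLAMA_URL = "http://localhost:11434"


def ollama_version(base_url=OLLAMA_URL, timeout=3.0):
    """Return the server's version string, or None if it cannot be reached."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
            return json.load(resp).get("version")
    except (urllib.error.URLError, OSError, ValueError):
        # Connection refused, timeout, or a reply that is not valid JSON.
        return None


version = ollama_version()
print(f"ollama {version} is up" if version else "ollama is not reachable")
```

Returning None instead of raising keeps the check usable at the top of other scripts, where a missing server should produce a friendly message rather than a traceback.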

Download models

Since ollama made some models available for free in the cloud, there is little need to download full models, and your computer won’t suffer as much as it does when prompting a local 8b model. That said, llama3.1:8b runs surprisingly well fully locally on a 16GB RAM laptop with no GPU.

In ollama’s own words:

Ollama’s cloud is a new way to run open models using datacenter-grade hardware. Many new models are too large to fit on widely available GPUs, or run very slowly. Ollama’s cloud provides a way to run these models fast while using Ollama’s App, CLI, and API.

and seems to be privacy friendly:

Ollama does not log or retain any queries.

(optional) Sign into ollama

This is only necessary to use the cloud models:

- Register at https://ollama.com/

- Create an API key at https://ollama.com/settings/keys

- Set an environment variable with the key:

export OLLAMA_API_KEY=your_api_key

- Then run:

ollama signin
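The key can also be sent straight to ollama.com from a script. This is a minimal sketch, assuming that ollama.com exposes the same /api/chat route as a local server and accepts the key as a Bearer token; the model name and the helper `cloud_chat_request` are placeholders, so check them against the models listed for your account.

```python
import json
import os
import urllib.request

# Assumption: ollama.com serves the same /api/chat route as a local server
# and accepts the key as a Bearer token.
CLOUD_URL = "https://ollama.com/api/chat"


def cloud_chat_request(prompt, model, api_key):
    """Build (but do not send) an authenticated chat request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for a single JSON reply instead of a stream
    }
    return urllib.request.Request(
        CLOUD_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


api_key = os.environ.get("OLLAMA_API_KEY")
if api_key:
    # Placeholder model name: use one that is listed for your account.
    req = cloud_chat_request("Why is the sky blue?", "gpt-oss:120b", api_key)
    with urllib.request.urlopen(req, timeout=60) as resp:
        print(json.load(resp)["message"]["content"])
else:
    print("OLLAMA_API_KEY is not set; skipping the cloud request")
```

Reading the key from the environment keeps it out of the script itself, which matters if the file ever ends up in a repository.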

Install and run a model

The neat thing is that when a model is not available it will be automatically downloaded. For cloud models it’ll be just a pointer but all the regular ollama commands should run as usual.

Anyway, the install mode is the same:

# a cloud model

# Note the `-cloud` suffix.

ollama run gpt-oss:120b-cloud

# completely local model

ollama run llama3.1:8b

This will start a prompt in the terminal, which can be exited with /bye.

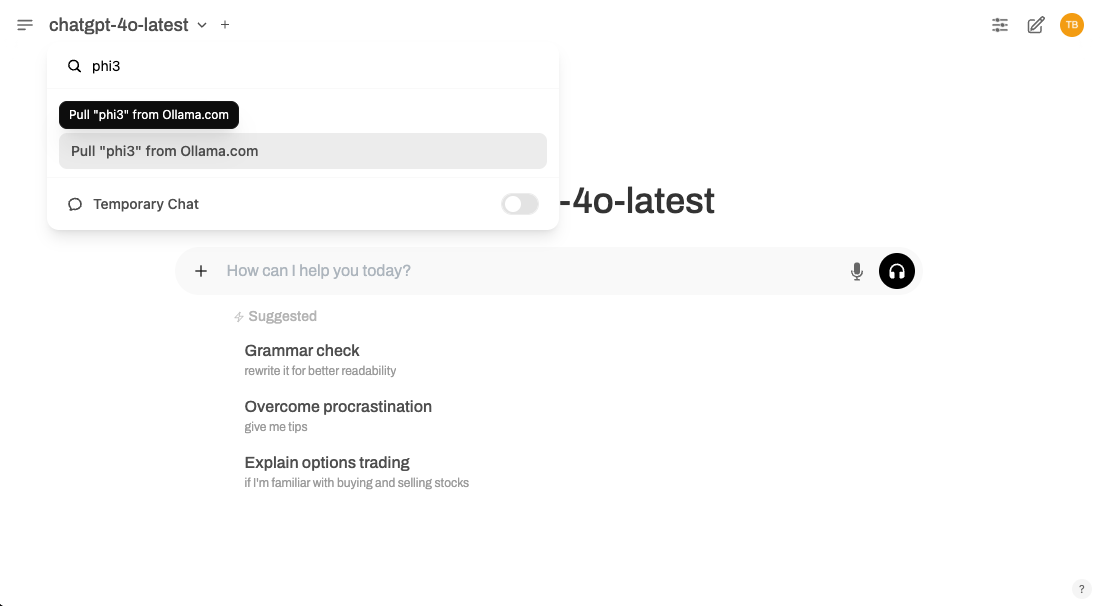
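Besides the interactive terminal prompt, a running ollama server can be queried from a script through its local HTTP API. This is a minimal sketch, assuming the default port 11434, the /api/generate route, and that llama3.1:8b has already been pulled; `build_payload` and `generate` are hypothetical helper names.

```python
import json
import urllib.error
import urllib.request

# Assumption: ollama is serving on its default local port.
GENERATE_URL = "http://localhost:11434/api/generate"


def build_payload(prompt, model="llama3.1:8b"):
    """JSON body for a one-shot, non-streaming completion."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt, model="llama3.1:8b", url=GENERATE_URL, timeout=120.0):
    """Send the prompt and return the model's reply, or None on failure."""
    req = urllib.request.Request(
        url,
        data=json.dumps(build_payload(prompt, model)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return json.load(resp).get("response")
    except (urllib.error.URLError, OSError, ValueError):
        # Server not running, model missing, timeout, or malformed reply.
        return None


reply = generate("Explain RAG in one sentence.")
print(reply if reply is not None else "no reply: is ollama running and the model pulled?")
```

Setting "stream" to False makes the server return one JSON object, which is simpler to parse than the default stream of partial replies.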

Use a GUI for prompting

Open WebUI is da bomb! Get started with https://docs.openwebui.com/getting-started/quick-start/

I will use a Docker image but deploy it with Podman because (i) Docker is a pain and (ii) I sometimes try new stuff, especially when someone has written such great instructions: https://roderik.no/podman-and-open-webui

# assumes podman is installed
# sudo apt-get -y install podman
podman pull ghcr.io/open-webui/open-webui:main
podman run --replace -p 127.0.0.1:3000:8080 --network=pasta:-T,11434 --env WEBUI_AUTH=False \
--add-host=localhost:127.0.0.1 \
--env 'OLLAMA_BASE_URL=http://localhost:11434' \
--env 'ANONYMIZED_TELEMETRY=False' \
-v open-webui:/app/backend/data \
--label io.containers.autoupdate=registry \
--name open-webui ghcr.io/open-webui/open-webui:main

Key flags:

- WEBUI_AUTH=False because otherwise the web interface asks for a login. Ain’t nobody got time for that.

- --add-host points localhost inside the container at 127.0.0.1, which is where ollama is serving its API. Nifty.

- ANONYMIZED_TELEMETRY=False for no tracking.

- --replace because every time I restart the container I get an error that the name open-webui is in use. This stops the nagging. It’s a hack, not a solution, I know.

Now go to your web browser and open http://127.0.0.1:3000/ to see a ChatGPT-like interface. I will let you pick the model.

Retrieval-Augmented Generation (RAG)

TL;DR: a way of using an LLM to query your internal documents, which increases the chances of getting accurate replies and provides the source for each answer. This is where I see a lot of value in LLMs for entities that have a lot of scattered internal knowledge (or a very large collection of scientific papers, like yours truly).

How to get all this good stuff on your laptop using Open WebUI?

- Upload the documents as a knowledge base.

- Connect the knowledge base to a model.

- Query the knowledge base.

- Profit?

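The nitty-gritty is that the documents are split into pieces of text (chunks), each chunk is converted into an embedding vector, and the vectors are added to a vector database. At query time, the chunks closest to the question are retrieved and handed to the model as context.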
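To make the chunk, embed, and retrieve idea concrete, here is a toy sketch in plain Python. It is not how Open WebUI implements RAG: the "embedding" is a simple bag-of-words count standing in for a real embedding model, the "vector database" is a list, and the documents and function names are made up for illustration.

```python
import math
import re
from collections import Counter


def chunk_text(text, size=8, overlap=2):
    """Split text into chunks of `size` words, sharing `overlap` words with the previous chunk."""
    if size <= overlap:
        raise ValueError("size must be larger than overlap")
    words = text.split()
    if not words:
        return []
    step = size - overlap
    return [" ".join(words[i:i + size])
            for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text):
    """Toy embedding: word counts. A real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_index(docs, size=8, overlap=2):
    """The 'vector database': a list of (source, chunk, vector) rows."""
    return [(name, chunk, embed(chunk))
            for name, text in docs.items()
            for chunk in chunk_text(text, size, overlap)]


def retrieve(index, question, k=2):
    """Return the k (source, chunk) pairs most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda row: cosine(q, row[2]), reverse=True)
    return [(name, chunk) for name, chunk, _ in ranked[:k]]


# Hypothetical documents, just for the demo.
docs = {
    "podman.txt": "Podman runs containers without a daemon. The replace flag "
                  "removes an old container that has the same name.",
    "ollama.txt": "Ollama serves a local API on port 11434. Models are "
                  "downloaded automatically the first time they are run.",
}
question = "Which port does the ollama API use?"
index = build_index(docs)
hits = retrieve(index, question, k=1)
context = "\n".join(f"[{name}] {chunk}" for name, chunk in hits)
print(f"Answer using only this context and cite the source:\n{context}\n\nQuestion: {question}")
```

The printed prompt is what would be sent to the model: the retrieved chunk carries its source file name, which is how a RAG answer can point back to the document it came from.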

Now a quick showcase:

Task completed.

Citation

@online{domingues2025,

author = {Domingues, António},

title = {Reminder on How to Run Ollama Locally and with {RAG}},

date = {2025-11-12},

url = {https://amjdomingues.com/posts/2025-11-12-local-ollama/},

langid = {en}

}